
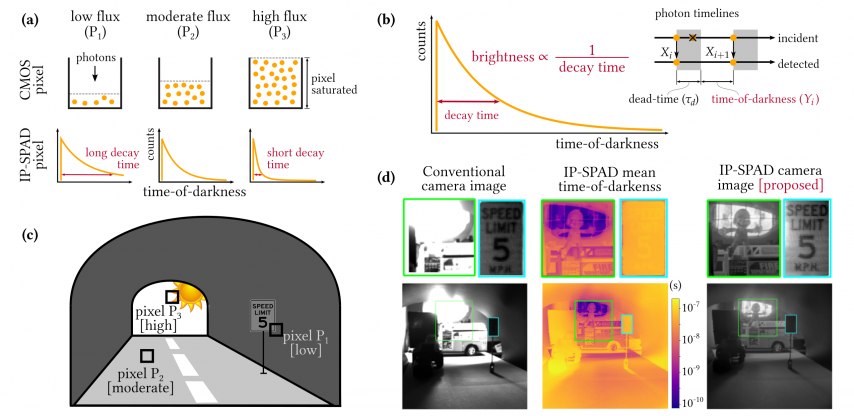

Inter-photon timing measurements captured by a passive single-photon-sensitive camera enable unprecedented dynamic range.

Digital camera pixels capture images by converting incident light energy into an analog electrical current and then digitizing it into a binary representation. This direct measurement method, while conceptually simple, suffers from limited dynamic range: electronic noise dominates under low illumination, and the pixel’s finite full-well capacity causes saturation under bright illumination. Here we show that inter-photon timing information captured by a dead-time-limited single-photon detector can be used to estimate scene intensity over a much higher range of brightness levels. We experimentally demonstrate imaging scenes with a dynamic range of over ten million to one. The proposed techniques, aided by the emergence of single-photon sensors such as single-photon avalanche diodes (SPADs) with picosecond timing resolution, will have implications for a wide range of imaging applications: robotics, consumer photography, astronomy, microscopy and biomedical imaging.


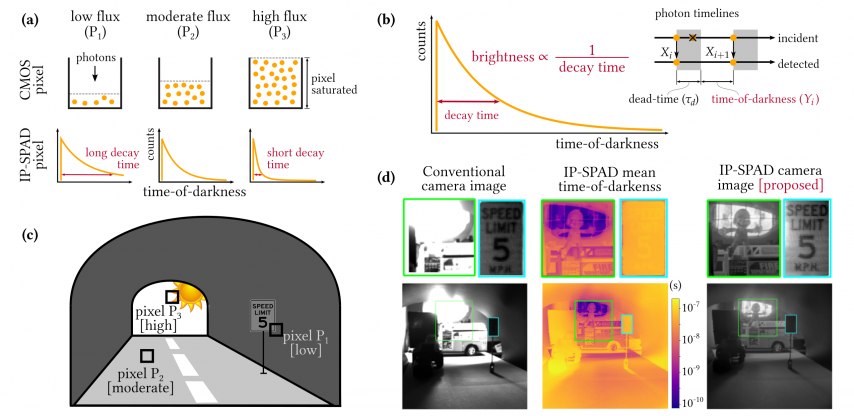

For a dead-time-limited SPAD pixel, the relationship between the number of incident and detected photons is non-linear. If we rely solely on discrete photon counts, extremely high flux causes a quantization artifact: a large range of incident flux values may map to the same photon count. If we instead measure the inter-photon timing directly, we can distinguish between two similar flux values: higher flux results in a smaller average inter-photon time, even when the resulting photon counts are the same.
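The counts-versus-timing distinction can be written out under a standard model, which we assume here rather than take from the figure: a free-running SPAD with a fixed, non-paralyzable dead time $\tau_d$, photon detection efficiency $q$, incident flux $\Phi$ and exposure time $T$ (our notation). Each inter-photon time $X$ is the dead time plus an exponentially distributed wait, which gives:

$$
\begin{aligned}
\mathbb{E}[X] &= \tau_d + \frac{1}{q\Phi}
  \qquad\Longrightarrow\qquad \hat{\Phi}_{\text{timing}} = \frac{1}{q\,(\bar{X} - \tau_d)}, \\
\mathbb{E}[N] &\approx \frac{T}{\mathbb{E}[X]} = \frac{q\Phi T}{1 + q\Phi\tau_d}
  \qquad\Longrightarrow\qquad \hat{\Phi}_{\text{count}} = \frac{N}{q\,(T - N\tau_d)}.
\end{aligned}
$$

As $\Phi \to \infty$, $\mathbb{E}[N] \to T/\tau_d$, so the integer count $N$ can take only a handful of values near this ceiling, while the mean inter-photon time $\bar{X}$ keeps decreasing continuously toward $\tau_d$. These are sketch formulas under the stated model, not necessarily the exact estimators used in the paper.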


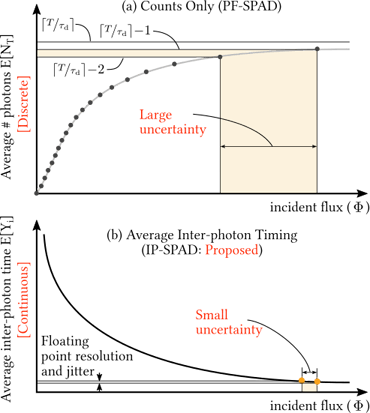

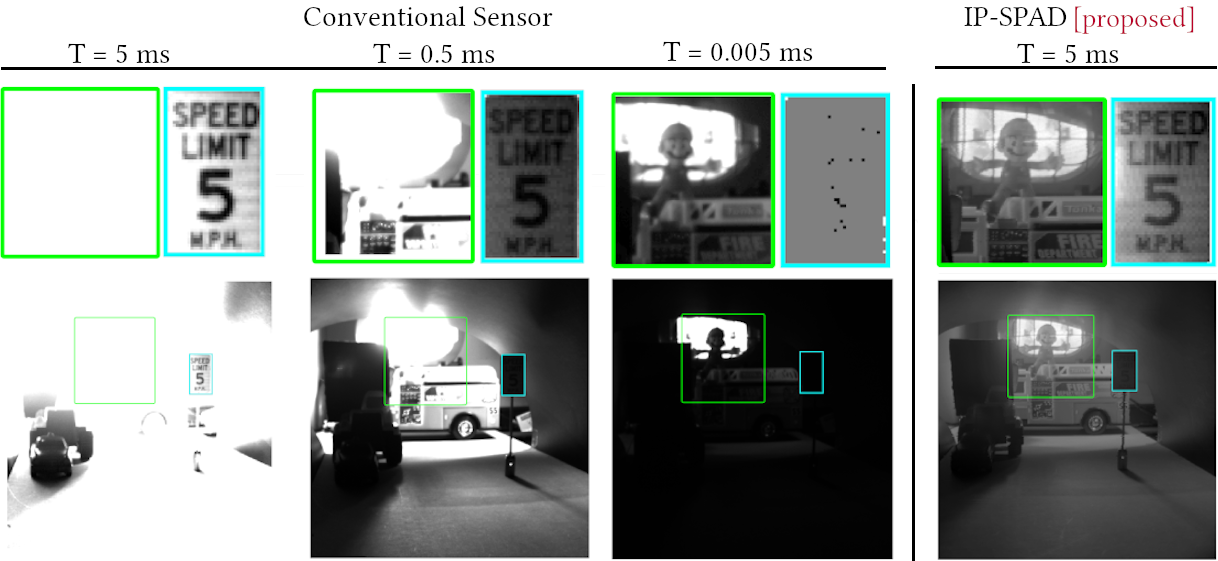

Conventional Sensor vs. IP-SPAD. This tabletop scene has over a million-to-one dynamic range. A conventional monochrome CMOS camera cannot reliably capture it in a single exposure. Our inter-photon timing method (IP-SPAD) captures it in a single shot, including fine details on the bright halogen lamp and text in the dark tunnel.


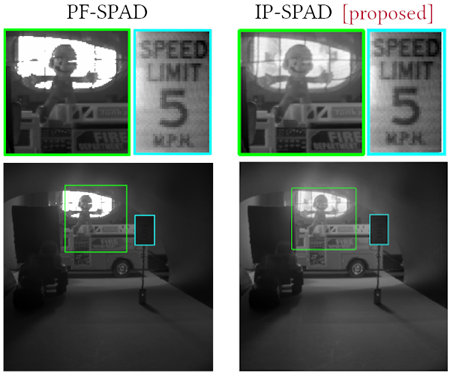

SPAD Counts vs. Timing. If we use only photon counts (PF-SPAD), we still obtain a higher dynamic range than a conventional CMOS camera, but the extremely bright pixels around the halogen lamp suffer from "soft saturation" artifacts. The photon flux at these pixels is so high that they all register the same (high) photon count. Using the small variations in average inter-photon timing, however, the IP-SPAD method can discern different brightness levels in this extremely high-flux regime. Observe the fine details around the halogen lamp.


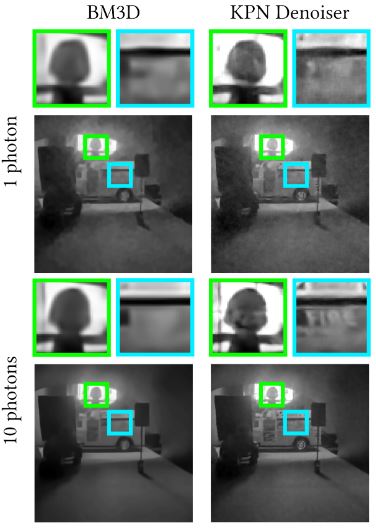

Photon Timing in the Low-Count Regime. Photon timing information can enable image reconstruction from extremely low photon counts, as few as 1 or 10 photons per pixel. Two denoising methods are shown here: an off-the-shelf BM3D denoiser and a neural denoiser based on a kernel prediction network (KPN). Remarkably, with as few as 10 photons per pixel, details such as facial features and text on the fire truck are discernible.


How does a conventional camera measure image pixels?

A conventional camera pixel acts like a “light bucket”: it collects photons for a fixed exposure time, and the bucket fills up in proportion to the scene brightness. The accumulated charge is then digitized by an analog-to-digital converter and read out in a digital (e.g., 8-bit or 16-bit) format.
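The “light bucket” model can be sketched in a few lines of Python. This is a minimal illustration, not the camera used in our experiments: the exposure time, quantum efficiency, full-well capacity, read noise and ADC bit depth below are all hypothetical values chosen only to show where the two ends of the dynamic range come from.

```python
import numpy as np

def conventional_pixel(flux, exposure=1e-3, qe=0.6, full_well=10_000,
                       read_noise=5.0, bits=12, rng=None):
    """Simulate one 'light bucket' pixel reading (all parameters hypothetical).

    flux: incident photons per second.
    Returns the digital number (DN) after ADC quantization.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Photoelectrons collected during the exposure (Poisson shot noise).
    electrons = rng.poisson(qe * flux * exposure)
    # The bucket overflows: charge is clipped at the full-well capacity.
    electrons = min(electrons, full_well)
    # Analog read noise (in electrons) is added before digitization.
    signal = electrons + rng.normal(0.0, read_noise)
    # Map [0, full_well] electrons linearly onto the ADC's 2**bits - 1 levels.
    max_dn = 2**bits - 1
    dn = np.round(signal / full_well * max_dn)
    return int(np.clip(dn, 0, max_dn))
```

Bright pixels pin at the maximum digital value once the full well is reached, while dark pixels are buried in read noise; with these made-up numbers the usable range is roughly `full_well / read_noise`, i.e., about 2000:1.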

How does your method measure image pixels?

Conventional cameras measure scene brightness by collecting photons over a fixed exposure time. In contrast, we use a single-photon-sensitive image sensor called a single-photon avalanche diode (SPAD), which captures precise inter-photon timing information with sub-nanosecond resolution. Intuitively, the higher the brightness, the shorter the measured inter-photon time.
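To make the timing idea concrete, here is a minimal simulation sketch. It assumes an idealized free-running SPAD: detectable photons arrive as a Poisson process with rate `qe * flux`, and after every detection the pixel is blind for a fixed, non-paralyzable `dead_time`. The function names and the parameter values (`qe=0.4`, `dead_time=150e-9`) are hypothetical, and the estimator simply inverts the mean inter-photon time under this model; it is not necessarily the exact estimator from the paper.

```python
import numpy as np

def simulate_spad_timestamps(flux, exposure, qe=0.4, dead_time=150e-9, rng=None):
    """Detection timestamps (seconds) for an idealized dead-time-limited SPAD."""
    rng = np.random.default_rng() if rng is None else rng
    rate = qe * flux  # detectable photons per second
    times = []
    t = rng.exponential(1.0 / rate)  # first detection (pixel ready at t = 0)
    while t < exposure:
        times.append(t)
        # Arrivals are memoryless, so the next detection comes one dead time
        # plus a fresh exponential wait after the current one.
        t += dead_time + rng.exponential(1.0 / rate)
    return np.array(times)

def flux_from_timing(times, qe=0.4, dead_time=150e-9):
    """Invert E[gap] = dead_time + 1 / (qe * flux) using the mean measured gap."""
    gaps = np.diff(times)
    if gaps.size == 0:
        raise ValueError("need at least two detections")
    return 1.0 / (qe * (gaps.mean() - dead_time))
```

For example, `flux_from_timing(simulate_spad_timestamps(1e8, 1e-3))` lands within a few percent of `1e8` photons/s, because a 1 ms exposure yields several thousand inter-photon gaps to average.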

How does the SPAD pixel respond to varying brightness levels?

Unlike conventional image sensor pixels, which produce a signal that is linearly proportional to the scene brightness, a SPAD pixel has a non-linear response curve. The scene brightness is inversely related to the average inter-photon time measured by the SPAD pixel. At extremely high brightness levels, this response curve flattens out, approaching an asymptotic saturation limit.
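The flattening response curve can be tabulated directly. This sketch assumes the same idealized non-paralyzable dead-time model as above (hypothetical `qe` and `dead_time` values), under which the mean detection rate is `qe * flux / (1 + qe * flux * dead_time)`.

```python
import numpy as np

def detection_rate(flux, qe=0.4, dead_time=150e-9):
    """Mean detection rate of an idealized dead-time-limited SPAD (per second)."""
    flux = np.asarray(flux, dtype=float)
    return qe * flux / (1.0 + qe * flux * dead_time)

fluxes = np.logspace(4, 12, 9)  # 1e4 ... 1e12 photons/s
for f, r in zip(fluxes, detection_rate(fluxes)):
    print(f"{f:9.0e} photons/s -> {r:11.4e} detections/s")
# The rate approaches, but never reaches, 1 / dead_time (about 6.67e6 /s here).
```

At low flux the output is essentially linear (`qe * flux`), while over the last few decades of flux the detection rate barely changes, which is the asymptotic saturation described above.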

Why does photon timing information perform better than photon counts?

At low-to-moderate flux, brightness estimated from photon counts over a fixed exposure time is a good approximation to the brightness estimated from the inter-photon timing. The key difference appears at high flux. Because a dead-time-limited SPAD has a non-linear relationship between the number of incident and detected photons, relying only on discrete photon counts at extremely high flux causes a quantization artifact: a large range of incident flux values may map to the same photon count. If we instead measure the inter-photon timing directly, we can distinguish between two similar flux values even when they produce the same photon count: the higher flux yields a smaller average inter-photon time, and vice versa.
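A noise-free toy calculation shows the effect. It assumes the idealized dead-time model with hypothetical parameters (a 10 µs exposure and a 150 ns dead time) and pretends every inter-photon gap equals its mean, so the count is just the number of mean gaps that fit in the exposure.

```python
import math

QE, DEAD_TIME, EXPOSURE = 0.4, 150e-9, 10e-6  # hypothetical values

def mean_gap(flux):
    """Mean inter-photon time under a non-paralyzable dead-time model."""
    return DEAD_TIME + 1.0 / (QE * flux)

def ideal_count(flux):
    """Noise-free count: how many mean-length gaps fit in the exposure."""
    return math.floor(EXPOSURE / mean_gap(flux))

for flux in (5.0e9, 1.0e10):
    print(f"flux={flux:.1e}: count={ideal_count(flux)}, "
          f"mean gap={mean_gap(flux) * 1e9:.3f} ns")
```

Doubling the flux from 5e9 to 1e10 photons/s leaves the count at 66 in both cases, while the mean gap shrinks from 150.50 ns to 150.25 ns; that difference is what a timing-based estimate can exploit.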

What are some limitations of a PF-SPAD?

Our previous work on passive free-running SPAD (PF-SPAD) imaging proposed a photon flux estimator that uses only photon counts. Because of its non-linear response curve, this counts-based PF-SPAD brightness estimator suffers from a quantization effect at extremely high brightness. The new IP-SPAD method overcomes this limitation by using precise photon timing information, which, unlike photon counts, is inherently continuous-valued. This extends the dynamic range of the IP-SPAD well beyond the quantization limit of a PF-SPAD.
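The PF-SPAD quantization effect can be quantified by inverting the idealized count response for consecutive integer counts. The sketch below assumes the non-paralyzable dead-time model and hypothetical parameters (1 ms exposure, 150 ns dead time); it shows how far apart the flux levels represented by neighboring counts become as the count approaches its ceiling of `EXPOSURE / DEAD_TIME`.

```python
QE, DEAD_TIME, EXPOSURE = 0.4, 150e-9, 1e-3  # hypothetical values

def flux_from_count(n):
    """Invert E[N] = q*flux*T / (1 + q*flux*dead_time) for a count n."""
    return n / (QE * (EXPOSURE - n * DEAD_TIME))

n_max = int(EXPOSURE / DEAD_TIME)  # counts cannot exceed T / dead_time
for n in (100, 1000, n_max - 10, n_max - 1):
    step = flux_from_count(n + 1) - flux_from_count(n)
    print(f"N={n:5d}: flux={flux_from_count(n):.3e}, "
          f"next level +{step:.3e} photons/s")
```

At low counts neighboring levels differ by about 1% in flux, but just below the ceiling a single extra count corresponds to more than doubling the estimated flux, so every flux in between is reported as the same value.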

What are some limitations of an IP-SPAD?

Although our IP-SPAD method can provide extremely high dynamic range, the range is not infinite, because in practice photon timestamps cannot be measured with infinite precision. Due to internal sources of noise and jitter, a SPAD can measure photon timing only with a resolution on the order of hundreds of picoseconds. As a result, we observe quantization artifacts when the true brightness is so high that the inter-photon times vary on time scales shorter than this resolution. Our method is also quite sensitive to variations in the pixel’s dead time and sensitivity. Moreover, our experimental proof of concept is limited to a single camera pixel; scaling it to large arrays will introduce additional design challenges and noise sources.
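The sensitivity to dead time follows from the timing estimate itself: under the idealized model, flux is recovered from `mean_gap - dead_time`, and at high flux that difference is tiny. The sketch below (hypothetical parameters, noise-free mean gaps) computes the relative flux error caused by a 100 ps error in the assumed dead time. A systematic 100 ps error in the measured mean gap would have exactly the same effect.

```python
QE, DEAD_TIME = 0.4, 150e-9  # hypothetical values

def flux_from_mean_gap(mean_gap, dead_time):
    """Timing-based flux estimate under a non-paralyzable dead-time model."""
    return 1.0 / (QE * (mean_gap - dead_time))

def relative_error(true_flux, dead_time_error):
    """Relative flux error when the assumed dead time is off by dead_time_error s."""
    mean_gap = DEAD_TIME + 1.0 / (QE * true_flux)  # noise-free mean gap
    est = flux_from_mean_gap(mean_gap, DEAD_TIME + dead_time_error)
    return est / true_flux - 1.0

for flux in (1e6, 1e8, 1e10):
    print(f"flux={flux:.0e}: error with 100 ps dead-time miscalibration = "
          f"{relative_error(flux, 100e-12):+.2%}")
```

The error is negligible at 1e6 photons/s, about 0.4% at 1e8, and about 67% at 1e10, where the excess time beyond the dead time (0.25 ns) is comparable to the calibration error itself.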

Related Work

- High Flux Passive Imaging with Single-Photon Sensors, CVPR 2019.
- Motion Adaptive Deblurring With Single-Photon Cameras, WACV 2021.
- Quanta Burst Photography, SIGGRAPH 2020.
- Asynchronous Single-Photon 3D Imaging, ICCV 2019.
- Photon-Flooded Single-Photon 3D Cameras, CVPR 2019.

Acknowledgements

This research was supported in part by DARPA HR0011-16-C-0025, DoE NNSA DE-NA0003921, NSF GRFP DGE-1747503, NSF CAREER 1846884 and 1943149, and Wisconsin Alumni Research Foundation.